Can we compare the classification of man-made objects to that of biological life? If so, what should a “screw” be compared to: a species, a genus, a family, or even a class in the domain of life? This question may not seem very meaningful or falsifiable at first glance. However, a quantitative comparison between the two disparate “domains” could be worthwhile. From a data-driven perspective, we can, for example, draw an analogy between the taxonomy of Amazon products across all niches and the existing systems of classification for living organisms. Both trees are well-documented and digitally accessible.

Vaclav Smil, in “The Diversity and Unification of the Engineered World”, draws a quick parallel between the taxonomy of the domain of life and the classification of man-made objects. He claims that in terms of “species” count, the diversity of man-made objects has already surpassed that of biological life. The former has been on the rise and the latter in decline since the dawn of civilization. Even if these two domains and their inverse trends do not map onto each other one-to-one, they are surely connected to one another:

Diversity is moving from the tree of life to objects.

Does it mean that in terms of innovation our technological species has surpassed “nature”? No, we are nature. And this trend is nature at play, moving from a geological, to a biological, to a technological planet. All of these stages are driven by a notion of evolution: a winner code prevailing against the selection pressures of an environment that is similar to, but extends beyond, biology.

Evolutionary sciences do exist outside biology, if only sporadically, and they are not as formalized or well-established as the theories of biological evolution that have been elaborated since Charles Darwin. Nevertheless, from a more “Lovelockian” perspective, it is worthwhile to zoom out of the conventional borders of biology and project the inferred rules of evolution beyond the 150-year-old tradition.

The competitive exclusion principle

According to the competitive exclusion principle (CEP) in ecology, two species competing over the same resource cannot coexist forever; one will eventually leave the scene to the other. We can see this in the economy when tech giants establish monopolies, taking up all the resources that serve a specific aspect of human life. With some stretch, we can also generalize this principle in a different direction by classifying man-made objects into kingdoms and species, just like plants and animals. In other words, we can look at both taxonomies, life and objects, as two terrestrial families competing over a single resource: our planet. Seen this way, man-made objects appear to be taking the playground from organic life.

And with the transfer of resources, diversity shifts too.

The modern explosion of manufactured objects alongside the decline of biodiversity should be viewed as the tale of a shift: diversity has gradually moved from the tree of life to the product space.
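The competitive exclusion principle described above is often illustrated with the Lotka-Volterra competition equations. The following is only a minimal sketch of that textbook model, not a model of products versus species: the function name `simulate_competition` and every parameter value (carrying capacities, growth rates, starting populations, step size) are hypothetical choices made for illustration. It assumes both populations draw equally on one shared resource and differ only in how large a population that resource can sustain.

```python
# Toy Lotka-Volterra competition model. All parameter values are
# illustrative assumptions, not measurements of any real system.

def simulate_competition(k1=100.0, k2=80.0, r1=1.0, r2=1.0,
                         n1=10.0, n2=10.0, dt=0.01, steps=20_000):
    """Euler-integrate two populations that share a single resource.

    Each individual of either population uses the resource equally
    (competition coefficients of 1), so only the carrying capacities
    k1 and k2 distinguish the two competitors.
    """
    for _ in range(steps):
        total = n1 + n2  # combined demand on the shared resource
        dn1 = r1 * n1 * (1.0 - total / k1)
        dn2 = r2 * n2 * (1.0 - total / k2)
        n1 += dn1 * dt
        n2 += dn2 * dt
    return n1, n2


if __name__ == "__main__":
    final_n1, final_n2 = simulate_competition()
    print(f"population 1: {final_n1:.3f}, population 2: {final_n2:.3e}")
```

Under these assumed defaults, the population with the larger carrying capacity settles near that capacity while the other decays towards zero, which is the exclusion outcome the principle predicts when two competitors fully overlap on one resource.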

So far, this looks obvious. But there’s a catch, and it is about us: humans as the catalyst of this shift.

It appears that we humans can be seen as a domain which not only caused the aforementioned shift but also hosted it for a short while. In short, we took diversity from life, had it to ourselves for a geological moment, and are now giving it to objects, in an emergent process that may not even be in our control.

Shift of diversity from life to objects, via humans?

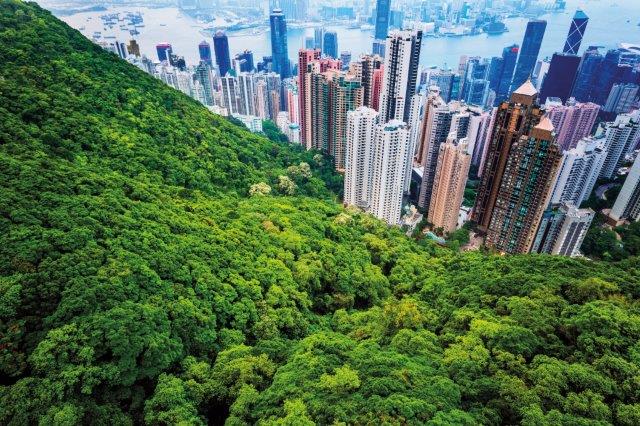

As buildings seize more land from trees and drones take more of the sky away from birds, we should note that between these two, animals and artifacts, there was an intermediate domain for hosting diversity which rose and fell: human culture, as the carrier of novelty.

A slightly more detailed story could go like this: one species out of millions unexpectedly dominated the earth and, while pushing the rest to die or adapt, diversified itself. It gave rise to an explosion of isolated cultures, languages, and lifestyles, a diversity unseen in any species beforehand. The cycle, however, did not stop there. Millennia later, one or a few of those many cultures, under industrial civilization, eventually pushed all the rest aside and sucked the diversity into its own territory, producing physical artifacts. And this is where we are now.

Clearly, many of these cultures didn’t completely die; they were marginalized and can still be found, at least partially, in some uncontacted tribes or in the corners of research institutes. But so can nearly extinct animals in zoos and labs. You get the point.

Now, why does keeping track of where novelty shifts matter? Well, because nature may well repeat the cycle. Let’s extrapolate: what would happen if evolution, in this era of novelty that is the age of man-made products, favored yet another front-runner, picked one or a few of these numerous by-products, and eventually pushed the rest from the “mainland” to marginalized “islands”? This would be similar to what we humans did to other species, placing a handful of wild, undomesticated animals in our zoos while driving the rest to extinction. It would also be similar to what modern cultures did to the old ones, killing them off or putting fragments of them on academic shelves or in museums. Each winner code seems to set a new agenda and eventually dictate the next primary carrier of diversity.

“Regimes” of life, based on the notion of diversity

The geologic timescale is conventionally divided into eons, eras, periods, epochs, and ages. The consensus does not yet allow even concepts such as the Anthropocene a formal place within this timeline. It is, therefore, quite a stretch to redefine this timescale based on a single species. I suggest, as an alternative, that we define a geological “regime”, for lack of a better term, based on the domain of diversity. This will serve us as more than just a descriptive model, since it can hold some predictive power and offer an alternative understanding of the past and the future of life on earth. So let us try to retell the story of terrestrial life based upon the notion of diversity:

1. The regime of cell diversity: What we call life is an explosion of life forms all based on very specific cell types. But it is suggested that prior to the Darwinian Threshold there must have been a diverse community of cells that evolved as biological units. The Last Universal Common Ancestor (LUCA), although already a complex organism with a DNA-based genome, is not thought to be the first life on earth, but only one of many early organisms, the progeny of all the others having become extinct. This means that during the transition from the prebiotic earth to the time of LUCA there existed a diverse pool of biological entities, at some point perhaps many different cell architectures, from which only these main types survived and went on to colonize the earth, up until now.

2. The regime of biodiversity (mono-cell): We could think of the whole Phanerozoic Eon as a time of biodiversity based on just eukaryotic and prokaryotic cells, the robust building blocks that survived out of all the other extinct cell types. This seems to have begun even earlier, towards the end of the previous eon, the Proterozoic, with the abundance of soft-bodied multicellular organisms, but it was certainly during the Cambrian explosion that life handed diversity over to species. Diversification of animals, plants, and fungi during this period reached a level that resembles the variety of life today.

3. The regime of cultural diversity (mono-species): The regime of biodiversity can span the whole current eon up until the Anthropocene. The Paleozoic, Mesozoic, and Cenozoic eras, with all their periods and epochs, can all be characterized as different eras of biodiversity. Throughout these times, life has repeatedly witnessed a single species rising in an attempt to colonize the earth and eventually falling. This time, however, it seems that Homo sapiens has reached a breakthrough unprecedented in previous species. Our ancestors and a handful of our domesticated species have now for some time taken the diversity from other species. During this period, humans have formed thousands of religions, languages, and isolated cultures.

4. The regime of product diversity (mono-culture): What we are experiencing now is the rise of a “monoculture” regime within the timeline of our civilization. This period can be defined by the dominance of industrial civilization, which has marginalized the surviving cultures, while many more have long been extinct. In terms of diversity, we can view this phase of civilization as a regime of life on earth, one that is all of a sudden serving as a platform for the modern and diverse pool of man-made objects.

5. The regime of mono-artifact: The point of all this reclassification is the horizons it can show us by virtue of extrapolation: What’s next? We can read the same old story once again.

Is it conceivable that one or a few objects, out of the current myriad of man-made artifacts and products, could come to dominate the existing technological diversity? In this simplified narrative, after the eukaryotic/prokaryotic cell types, the human genome made of that particular cell type, and the industrial civilization made by that particular genome, a certain greedy object made by that particular culture could indeed take the crown from the other objects and redefine the future of Earth’s ecosystems. What would that object be? My blind guess is that it is more likely to be in the category of AI code running on silicon chips than to be a hair dryer. But who knows?

And even if we find a handful of “winner objects” that could colonize the earth, the next question is: with the rise of that new platform, what would be the next territory to win nature’s focus, where it experiments with its innovation and hosts the future diversity?

We are probably as unaware of those forms of inorganic future life as single cells were of trilobites. But we can expect that something unimaginably crazy is plausible.

And none of these claims means that we, as the current front-runners of organic life, are going to die and fully give up the playground to an exotic form of inorganic life. We humans need forests and wildlife to sustain our biological bodies. So in order to run their greedy codes of reproduction and dominance, the mysterious winner objects are also likely to depend on our flesh, which depends on Earth’s life support systems. If this transformation succeeds, we will be brutally domesticated. If not, we will go extinct and this spark will die off. Nature’s tide would then slosh back all the way to the regime of biodiversity, until maybe a new spark brings about the next geological explosion.